RUM for a small race-car website: Trying out SpeedCurve, RUMvision, mPulse

Morgan Murrah

This Real User Monitoring (RUM) journey all started because this website is not likely to ever make it to the CrUX report. The logic for excluding small websites from the CrUX report makes sense: a site must be publicly discoverable and must have a large enough number of visitors to create a statistically significant dataset. Not every website can be included.

Still, I felt like I was missing out, so I went looking for RUM solutions for the ’little guy’, i.e. Morganwebdev.org, which was not sufficiently popular. This led me to try three different services as a trial user, and this post is my informal recap of my experience.

Previewing a future tool in a small context

This website serves a useful purpose for me as a way of networking and testing some of my skills. It seemed worthwhile to try several products on it to learn what RUM is like, in case I need it in my future career.

I became particularly interested in RUM because it can track real user performance before CrUX data is released, even for a website that would qualify for CrUX. Knowing what real users are experiencing would enable quicker fixes rather than waiting for CrUX.

I started talking about wanting to try RUM on the Web Performance Slack and got in touch with Cliff Crocker, Erwin Hofman and bluesmoon, who all generously set me up with trial usage of the products they are working on. I ended up trying out three providers: SpeedCurve, RUMvision, and mPulse.

This review is mostly about my UX impression of the products and is not heavily focused on metrics. I judged my UX experience mainly by how I felt logging in and using the services.

My situation was limited because this is a tiny site that largely had no performance issues of note. It is statically built and does not get a huge amount of traffic. I thought of it as something like RUM for a small race car.

Summary:

Return experience overall winner:

SpeedCurve 🏆🏆🏆

I felt like coming back to using SpeedCurve more, and it seemed to have a sturdy feeling to it. Something about the focused design and aesthetic appealed to me the most. I used it the most and settled on it.

Fresh experience award:

RUMvision 🏆🏆

A newer-feeling UI, a little more modern, a little more pop, and some great features coming through, but it did not feel quite as sturdy when I returned to it. Somehow I feel I didn’t quite engage as much with the numbers or graphs.

Industrial strength experience award:

mPulse 🏆🏆

Lots of dashboard power! I only touched the surface but it seemed bigger than what I was going for.

Background to using the tools:

I would describe myself as a journeyman-level web developer with an interest in web performance and an ability to:

- Run the tests

- Investigate issues

- Enable solutions

How long it takes me to figure out a certain thing varies, but I've had a pretty good run for a few years of using related skills at work. When I think of performance in practical terms I first think of things like:

- Removing unnecessary stuff

- Prioritizing delivering initial HTML and CSS over JS

- Organizing your HEAD element well

- Appropriately sized images

- Knowing when to seek out expertise
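As a rough illustration of the "appropriately sized images" item, here is a minimal sketch of how one might flag an image that carries far more pixels than the layout needs. The `ImageMeasurement` type, the helper names, and the 1.5x threshold are all assumptions made up for this example; they do not come from any of the tools reviewed here.

```typescript
// Hypothetical shape: the three numbers needed to judge an image's size.
interface ImageMeasurement {
  naturalWidth: number;     // intrinsic pixel width of the image file
  renderedWidth: number;    // width in CSS pixels as laid out on the page
  devicePixelRatio: number; // physical pixels per CSS pixel on this screen
}

// Assumed threshold: anything over 1.5x the needed pixels counts as oversized.
const OVERSIZE_RATIO = 1.5;

// Ratio of delivered pixels to the pixels the layout can actually show.
// Images that are not rendered (width 0) cannot be judged, so they return 0.
function oversizeRatio(m: ImageMeasurement): number {
  const neededPixels = m.renderedWidth * m.devicePixelRatio;
  return neededPixels > 0 ? m.naturalWidth / neededPixels : 0;
}

function isOversized(m: ImageMeasurement): boolean {
  return oversizeRatio(m) > OVERSIZE_RATIO;
}
```

In a browser, the inputs could be read from an `img` element's `naturalWidth` and `clientWidth` plus `window.devicePixelRatio`.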

Installing all three tracking scripts

At one time I was running all 3 RUM solutions at the same time. Installation felt as easy as anything in web dev: I was given snippets of code, put them in the head element of my website and published it. I didn't get granular enough to see whether the order of the 3 snippets affected the results somehow.

My onboarding experience as a trial user was also a little bespoke, and that colored my experience. SpeedCurve set me up with a usual login, adjusted my plan, and then left me to it with their docs, which were great. Erwin Hofman from RUMvision set up my domain for me; I just had to put the snippet on my website and it started populating some results. mPulse required some specific setup by bluesmoon that we debugged a little.

Overall, it was very smooth getting started with all three services. Each time, I added the script content, published my site and then shared my website on forums and Slack workspaces I frequent. I saw plausible-looking traffic in the dashboards and metrics in all three.

In terms of data I am light on numbers, but I had something like 1500 RUM sessions in SpeedCurve over approximately a year. I had 653 hits in RUMvision over about 7 months, starting at Christmas. I had comparable numbers in mPulse, which I tried for a few months. It felt like enough to plot a trendline, but it is not a huge amount and I acknowledge the small sample size of this experiment.

Race Car Problems

This website is statically generated with minimal assets and resources. The biggest issue I found with all three sets of RUM results is that this website was almost too fast, so it was hard to detect anything to fix. In the absence of obvious problems to fix, I mainly thought about how the three services made me feel.

On all three platforms I was regularly getting good synthetic scores that did not vary a whole lot. I found that it was not easy to line up all three for side-by-side comparisons at specific points in time; at least, it took more effort than I was willing to spend.

For my experiment, my returning visitor experience was key.

I would forget about the RUM on my website for days or weeks and then return to see how it was going. I had the repeated experience of thinking “I wonder how the RUM is going” and then proceeding to log in to all three and tinker around with them.

Something about the SpeedCurve UI feels sharp, focused, and oriented towards this repeat visitor. I feel like I could forget about this and return to it when needed and it would have done a lot of the work for me.

I explored almost all the menus and options and found what I needed with low friction. I experienced a regression on one page I examined, because I had used a significantly oversized image, and within SpeedCurve I was able to see that result from synthetic testing after deployment before I really saw it in the RUM.

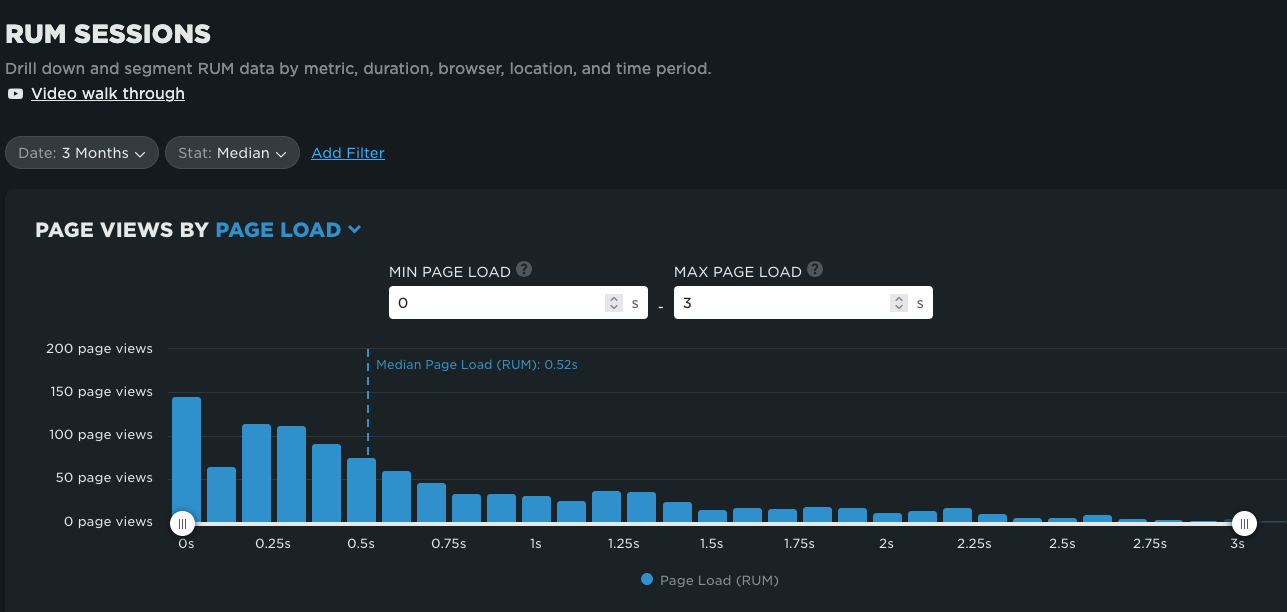

I like the Page load curve that shows up as the first visual. You see the tail that gives the overall experience and potentially hints at problems in a very quick and not overwhelming view. Something about this view perhaps made me like it the most.

Email options about performance budgets seemed effective. Getting notifications of regressions seemed like a great practice and I liked the way SpeedCurve handled email. Their newsletter got me into learning more about their product.

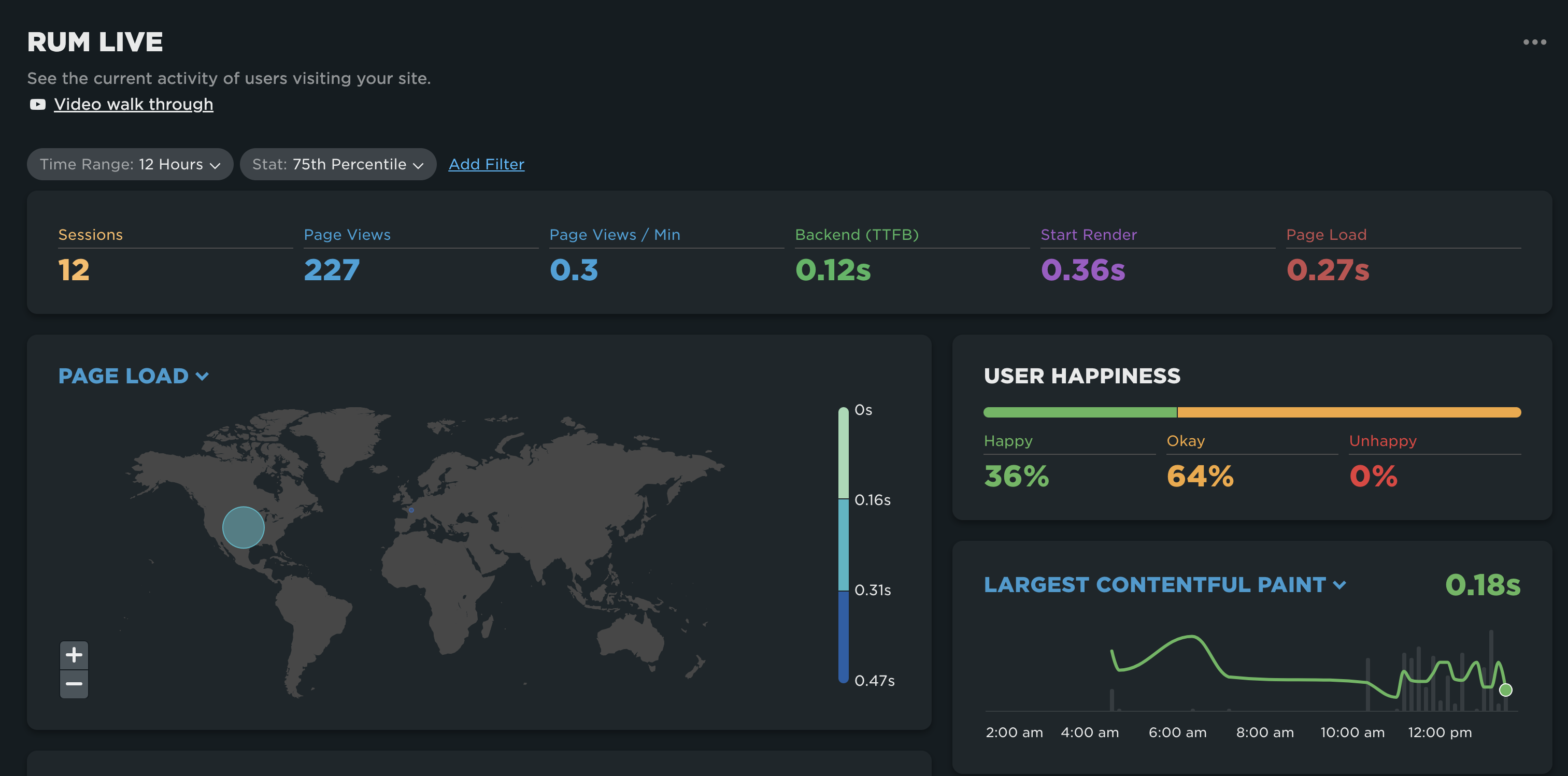
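To make the idea of the "tail" and a performance budget concrete, here is a minimal sketch that summarizes a set of page load times by percentile and checks one percentile against a budget. It uses the simple nearest-rank percentile method, which is my assumption and not necessarily how SpeedCurve calculates its charts or alerts; the function names, sample values, and budget are all hypothetical.

```typescript
// Nearest-rank percentile: the smallest sample such that at least
// p percent of all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("percentile needs at least one sample");
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Hypothetical budget check: true when the chosen percentile is over budget.
function exceedsBudget(samples: number[], p: number, budgetMs: number): boolean {
  return percentile(samples, p) > budgetMs;
}

// Made-up load times in milliseconds: mostly fast, with one slow outlier.
const loadTimesMs = [800, 900, 1000, 1100, 1200, 1300, 1400, 1500, 1600, 5000];

const median = percentile(loadTimesMs, 50); // the typical visit
const p75 = percentile(loadTimesMs, 75);    // CrUX also reports at the 75th percentile
const p95 = percentile(loadTimesMs, 95);    // the tail, where the outlier shows up
```

With a tiny sample like mine, one slow visit can dominate the high percentiles, which is part of why the tail is worth a look but hard to act on.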

RUMvision: A fresh feeling

RUMvision has a fresh UI and a clean feeling. It was very apparent that it was being optimized and expanded by vibrant people.

One thing it introduced me to was tracking prerendering. I had set up Speculation Rules on my website and I was able to confirm that some real users were experiencing prerendered pages, and not just me at home in my Chrome browser. That was a cool moment in my experience.
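For readers who have not seen this, here is a minimal sketch of how a page can tell whether it was prerendered. It relies on two browser signals I am confident exist in Chromium: `document.prerendering` and the `activationStart` field on the navigation timing entry. The rule set, the `NavigationTimingLike` type, and the `wasPrerendered` helper are hypothetical examples, not my actual configuration and not RUMvision's implementation.

```typescript
// Hypothetical rule set; on a real page this JSON would live inside
// a <script type="speculationrules"> element.
const exampleSpeculationRules = {
  prerender: [{ where: { href_matches: "/*" }, eagerness: "moderate" }],
};

// Only the field this sketch needs from a navigation timing entry.
interface NavigationTimingLike {
  activationStart?: number; // ms between prerender start and activation
}

// A page counts as prerendered if it is still being prerendered now,
// or if it was activated some time after its navigation started.
function wasPrerendered(
  isCurrentlyPrerendering: boolean,
  nav?: NavigationTimingLike,
): boolean {
  return isCurrentlyPrerendering || (nav?.activationStart ?? 0) > 0;
}

// Browser usage (commented out so the sketch also runs outside a browser):
// const [nav] = performance.getEntriesByType("navigation");
// const prerendered = wasPrerendered(document.prerendering, nav);
```

A RUM script could attach a flag like this to each page view so that prerendered visits can be separated from normal navigations.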

Baseline integration feature looks cool!

I like this product even though it was not my favorite of the three. I imagined myself setting up marketing sites with it for launches, and I would use it again.

mPulse: A foundry, gritty feeling

mPulse had an incredibly cool visual representation of RUM data called an aggregated resource waterfall, with many options and configurations. A new UI was being tested while I used it. Somehow the return experience was not as good: for the purposes of my little experiment it felt too big for me as a little guy.

mPulse felt like something I would use at work. For the purposes of my experiment it may have been a little disadvantaged just because it was the last one I tried in my RUM journey. Something about trying it made me think I had exhausted my search.

At this point, I felt I had maybe had enough RUM in my life for now.

Overall conclusions on the quest for small RUM for a race-car website:

After trying all three and tinkering with them over a few months, I was ready to downsize and consolidate.

Learning about RUM tools seems worthwhile if you have a specific interest in potentially adopting one at work. I got a lot out of being a trial user of three, and I hope I have written this in a way that's somewhat helpful to the vendors who gave me a shot in my quest for the small RUM experience.

All three seemed well suited, each with different strengths. In the circumstances of my trial, SpeedCurve won on the basis of the return experience. I felt like I could keep coming back to it to see how my little website is doing, so I kept it.